As of 2025, the use of artificial intelligence, whether conscious or not, has become commonplace. AI agents assist with research, content creation, and the editing of videos, images, and texts, and are deeply integrated into the digital tools we use for work, study, or entertainment. This is reflected in a sharp rise in interest from the global public, as shown by Google search trends for the topic “Artificial Intelligence.”

From the timeline chart, we can observe the turning point in public interest in November 2022, linked to the public launch of ChatGPT (November 30, 2022), an OpenAI product based on GPT-3.5.

Google Trends chart for the topic “Artificial Intelligence.”

Map of interest in the topic “Artificial Intelligence” by region. Countries with low search volumes are excluded.

The geographic map, by contrast, offers some surprises. It confirms China at the top of the ranking for interest and South Korea on the podium, as one might expect from two countries recognized as technological and innovative hubs. Two results are surprising, however: the absence of the U.S. from the top five countries and Ethiopia’s second-place position.

Ethiopia’s second-place ranking is due to a combination of two factors:

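- A lower absolute search volume: Google Trends scores measure a topic’s share of all searches in a region rather than the raw number of searches, so a viral topic can achieve a relatively high score where overall volume is low;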

- The Ethiopian government’s push for AI innovation, including organizing PanAfriCon AI 2022 (the first pan-African AI conference, held in October 2022) and subsequent official steps, such as approving a national AI strategy in 2024, supported by the Ethiopian Artificial Intelligence Institute.
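The first factor is easier to see with numbers. Google Trends reports relative scores: a topic's share of all searches in a region, rescaled so that the highest value becomes 100. The sketch below is a simplified illustration of that idea, not Google's actual pipeline; the region names and search counts are invented for the example.

```python
def relative_interest(topic_searches: int, total_searches: int) -> float:
    """Share of a region's searches that concern the topic."""
    return topic_searches / total_searches


def scale_to_100(shares: dict[str, float]) -> dict[str, int]:
    """Rescale shares so that the region with the highest share scores 100."""
    top = max(shares.values())
    return {region: round(100 * share / top) for region, share in shares.items()}


# Hypothetical figures: (searches about the topic, all searches in the region)
regions = {
    "Large country": (2_000_000, 1_000_000_000),
    "Small country": (30_000, 10_000_000),
}

shares = {name: relative_interest(topic, total) for name, (topic, total) in regions.items()}
scores = scale_to_100(shares)
# The small country scores 100 and the large one 67, even though the
# large country made far more searches about the topic in absolute terms.
```

With these assumed numbers, the region with fewer searches overall ranks first because the topic makes up a larger share of its traffic.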

These data confirm the significance and impact AI has had and continues to have in the contemporary world. But what exactly is Artificial Intelligence? How and when did it develop? And what are its real impacts on today’s society? Let’s explore it together!

Artificial Intelligence: definition and purpose

An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.

from OECD Artificial Intelligence Papers #8, March 2024

The definition just introduced is the one adopted by the OECD (Organisation for Economic Co-operation and Development); it is currently one of the most detailed and widely recognized definitions. Let’s break down its key concepts:

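- machine-based system: indicates the nature of AI, that is, a system that runs on machines (hardware and software) and can operate without a human carrying out each step. Human involvement is concentrated in design and development, while the degree of autonomy varies from one system to another.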

- explicit or implicit objectives: these indicate the goals that AI must achieve. Objectives can be explicitly given as commands by humans or implicitly embedded in its programming.

- infers from the input it receives: indicates AI’s ability to process, interpret, and learn. Inputs can be commands, the surrounding environment, or a data source.

- generate outputs: refers to the AI’s final action, that is, producing a prediction, piece of content, recommendation, or decision that responds coherently to its objectives, using the available inputs.

- can influence physical or virtual environments: refers to the consequences the AI’s output can have, usually recognized as the system’s ability to influence the environment in which it operates.

The most fitting example, reflecting both the definition and common perception, is autonomous driving. A self-driving car is essentially an automated machine where explicit commands (e.g., “take me to Times Square in New York City”) and implicit ones (e.g., traffic rules, ethical dilemmas) merge. Through processing the available data (proximity sensors, cameras, satellite maps), it performs driving maneuvers, effectively influencing the surrounding physical environment (human movement, traffic flow).
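To make the mapping between the definition and the example concrete, here is a deliberately tiny sketch of the same loop: objectives, input, inference, output, and effect on the environment. It is a toy rule-based controller under invented assumptions (the names `Observation`, `decide`, and `apply_action`, the speed limit, and the safety distance are all hypothetical), and it is nowhere near a real driving system.

```python
from dataclasses import dataclass

SPEED_LIMIT_KMH = 50.0   # implicit objective: a traffic rule built into the system
SAFE_DISTANCE_M = 20.0   # implicit objective: keep a safety margin


@dataclass
class Observation:
    """The input the system receives (a stand-in for sensors and cameras)."""
    speed_kmh: float
    obstacle_distance_m: float


def decide(obs: Observation, requested_speed_kmh: float) -> str:
    """Infer an output (a decision) from the input and the objectives.

    requested_speed_kmh is the explicit objective given by the passenger.
    """
    target = min(requested_speed_kmh, SPEED_LIMIT_KMH)
    if obs.obstacle_distance_m < SAFE_DISTANCE_M:
        return "brake"
    if obs.speed_kmh < target:
        return "accelerate"
    if obs.speed_kmh > target:
        return "brake"
    return "hold"


def apply_action(obs: Observation, action: str) -> Observation:
    """The output influences the environment: here, only the car's speed."""
    delta = {"accelerate": 5.0, "brake": -5.0, "hold": 0.0}[action]
    return Observation(max(0.0, obs.speed_kmh + delta), obs.obstacle_distance_m)


obs = Observation(speed_kmh=40.0, obstacle_distance_m=100.0)
action = decide(obs, requested_speed_kmh=70.0)   # limited to 50 by the implicit rule
next_obs = apply_action(obs, action)
```

The passenger asks for 70 km/h, but the built-in limit caps the target at 50 km/h, which is how explicit and implicit objectives combine in this sketch.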

Besides perfectly illustrating AI’s capabilities, this example also reveals something many non-experts may not know: the first commercially available self-driving vehicle was released in January 2014, almost nine years before ChatGPT’s launch. That’s right! AI has a much longer history than one might think, with its theoretical roots going back to the 1940s.

History snippets

The first commercially available autonomous vehicle was the Navia Shuttle by the French company Induct Technology.

The Navia Shuttle was an electric vehicle designed to transport up to 10 passengers with a speed limited to 20 km/h. Its primary application was in highly populated but controlled environments, such as airports, university campuses, and corporate centers.

The launch of Navia in January 2014 is considered a major milestone in the history of autonomous driving.

Despite the technological and commercial innovation, several factors—including a niche market, high software development and hardware assembly costs, and design characteristics unsuitable for more dynamic environments such as city streets—led Induct Technology to be liquidated and shut down just a few months after Navia’s launch, in May 2014.

However, the assets of Induct Technology were acquired by a new group, which in 2015 established a new company named Navya, paying tribute to the first ill-fated autonomous vehicle.

The birth and history of AI: the Turing test

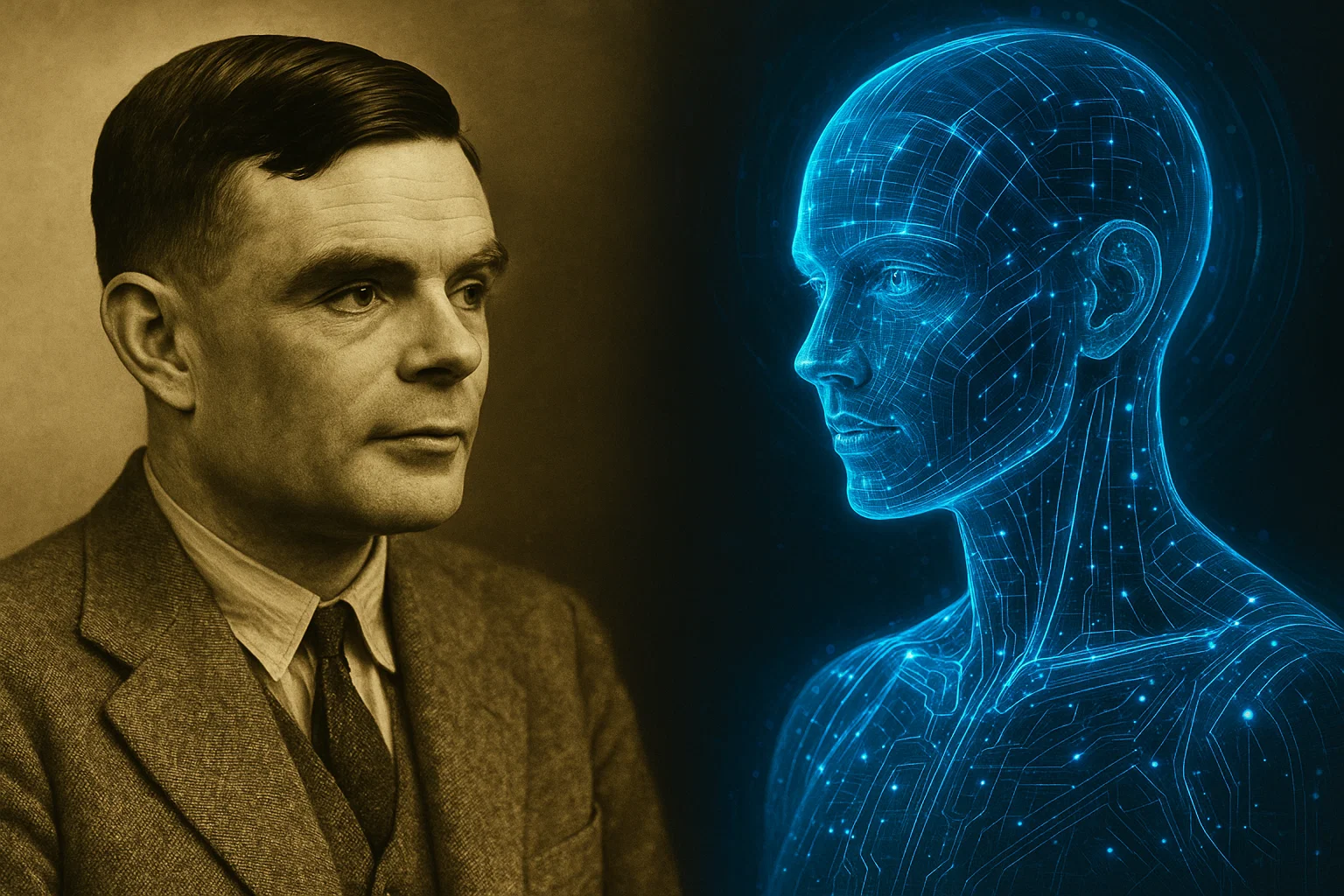

Alan Mathison Turing (1912 – 1954) was a British mathematician, logician, cryptographer, and philosopher. He is universally regarded as one of the founding fathers of computer science and one of the greatest mathematicians of the 20th century, and it is thanks to him that we can write about Artificial Intelligence today. His two most significant and famous contributions are the “Turing Machine” (1936) and the “Turing Test” (1950).

In 1936, Turing published the paper “On Computable Numbers, with an Application to the Entscheidungsproblem”, in which he described a computational model in order to address a problem (the Entscheidungsproblem) posed by mathematician David Hilbert, showing that the problem has no general solution. This computational model was essentially a description of an automaton capable of reading and writing data on a potentially infinite tape according to a set of predefined rules and states. Without going into technical details, it is sufficient to know that the Turing Machine is used to formalize the concept of an algorithm: according to the widely accepted Church-Turing thesis, any problem that can be solved by an algorithm can be solved by a Turing Machine.
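For readers who want to see the model in action, the following is a minimal sketch of a Turing Machine simulator. The function name, the rule format, and the sample rule table (which adds 1 to a binary number) are choices made for this illustration and are not taken from Turing's paper.

```python
def run_turing_machine(tape, rules, state="right", blank="_", max_steps=1000):
    """Run a one-tape Turing Machine and return the final tape contents.

    rules maps (state, symbol) -> (symbol_to_write, move, next_state),
    where move is "L", "R", or "N" (stay). The machine stops in state "halt".
    """
    cells = dict(enumerate(tape))   # sparse tape, unbounded in both directions
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += {"L": -1, "R": 1, "N": 0}[move]
        steps += 1
    if not cells:
        return ""
    span = range(min(cells), max(cells) + 1)
    return "".join(cells.get(i, blank) for i in span).strip(blank)


# Sample rule table: add 1 to a binary number written on the tape.
INCREMENT_RULES = {
    ("right", "0"): ("0", "R", "right"),   # scan right to the end of the number
    ("right", "1"): ("1", "R", "right"),
    ("right", "_"): ("_", "L", "carry"),   # past the last digit: start carrying
    ("carry", "1"): ("0", "L", "carry"),   # 1 + 1 = 0, carry moves left
    ("carry", "0"): ("1", "N", "halt"),    # 0 + 1 = 1, done
    ("carry", "_"): ("1", "N", "halt"),    # carry past the first digit
}

result = run_turing_machine("1011", INCREMENT_RULES)   # "1100" (11 + 1 = 12)
```

The whole "program" is the rule table: the simulator itself only reads a symbol, writes a symbol, moves the head, and changes state, which is all the model allows.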

The following video shows a real-world example of a Turing Machine (more information about the project in the video here).

In 1950, in the paper “Computing Machinery and Intelligence”, Turing proposed a criterion to determine whether a machine can exhibit intelligent behavior.

The so-called Turing Test is based on the “imitation game,” which involves three participants: a man (A), a woman (B), and a third person (C). The third person is kept separate from the other two and must determine, through a series of questions, which is the man and which is the woman. A’s goal is to deceive C into making an incorrect identification, while B’s role is to help C identify the correct answer. The test is based on the premise that if a machine takes the place of A and the percentage of times C correctly identifies the man and the woman remains similar before and after the substitution, then the machine should be considered intelligent, since it would be indistinguishable from a human being.

Naturally, this test in its original formulation is not without flaws, but it introduces a fundamental concept: a machine can be considered “intelligent” when its responses (outputs) to external stimuli (inputs) are such that it cannot be distinguished from a human being—in other words, it can perform operations and tasks at the level of a human, equating its programming to a form of thought.
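The comparison at the heart of the imitation game can be written down in a few lines. This is only a sketch: the function name and the `tolerance` threshold are assumptions made for the example, since the text above only requires the two percentages to be "similar".

```python
def indistinguishable(human_correct: int, human_rounds: int,
                      machine_correct: int, machine_rounds: int,
                      tolerance: float = 0.05) -> bool:
    """Compare how often C identifies the players correctly in the two setups.

    human_*:   rounds played with a human in the role of A
    machine_*: rounds played with a machine in the role of A
    tolerance: hypothetical cut-off for calling the two rates "similar"
    """
    if human_rounds <= 0 or machine_rounds <= 0:
        raise ValueError("each setup needs at least one round")
    human_rate = human_correct / human_rounds
    machine_rate = machine_correct / machine_rounds
    return abs(human_rate - machine_rate) <= tolerance


# C is right 70% of the time against a human A and 66% against the machine:
similar = indistinguishable(70, 100, 66, 100)     # True with the default tolerance
# C is right 70% of the time against a human A but only 40% against the machine:
different = indistinguishable(70, 100, 40, 100)   # False: the substitution changed the outcome
```

Note that the criterion looks at any change in C's success rate, in either direction: what matters is that replacing the human with the machine leaves the game statistically unchanged.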

From the introduction of the Turing machine onward, the science of Artificial Intelligence went through alternating phases:

1943-1956

These are the years in which the theoretical and mathematical foundations were laid, on which the entire field would be built in the following decades:

- 1943 – first mathematical model of the artificial neuron, and of networks of such neurons, by Warren McCulloch and Walter Pitts

- 1950/1953 – first chess-playing programs described by Claude Shannon (1950) and Alan Turing (1953)

- 1951 – construction of the first neural network computer (SNARC) by Marvin Minsky and Dean Edmonds

1952-1969

The most optimistic period in the field. These were years of great excitement, during which mathematicians, engineers, philosophers, and researchers built on the theoretical foundations laid in the previous decade. In 1956, the Dartmouth Summer Research Project on Artificial Intelligence (also known as the Dartmouth Conference) was held: a two-month workshop attended by John McCarthy, Marvin Minsky, Nathaniel Rochester, Claude Shannon, Ray Solomonoff, Oliver Selfridge, Trenchard More, Arthur Samuel, Allen Newell, and Herbert Simon. McCarthy coined the term “Artificial Intelligence” in the proposal for this event. The conference would later be recognized as the event marking the birth of AI as a research field.

1966-1979

A period of slowdown in research. The field stalled due to a lack of results and, consequently, a lack of funding. The excessive optimism of the previous decade had created unrealistic expectations.

The following quote is often cited to illustrate the mindset of the time:

Within 10 years, computers would beat the world chess champion, compose ‘aesthetically satisfying’ original music, and prove new mathematical theorems.

– Herbert Alexander Simon, economist, psychologist, and computer scientist. Nobel Prize in Economics in 1978

It goes without saying that this prediction proved to be far too optimistic.

1980-1988

Despite the slowdown, research did not stop. In the 1980s it found new momentum when Artificial Intelligence algorithms, particularly expert systems, found applications in the industrial sector, helping to optimize order and sales management. By 1988, the AI market was estimated to generate a business volume of approximately $2 billion.

1986-present

The period marked by the return of artificial neural networks, thanks to the error backpropagation algorithm popularized by David E. Rumelhart, Geoffrey E. Hinton, and Ronald J. Williams in 1986. This was the theoretical and technical breakthrough that paved the way for much of contemporary AI.

21st century

This era is marked by the rise of increasingly powerful processors, ever faster and more widespread internet access, and the ability to capture and store more and more data in real time. It is the age of Big Data, cloud computing, distributed networking, and parallel computing. As they matured, these technologies enabled, and continue to enable, the development of increasingly sophisticated (and computationally demanding) algorithms, in practice and not just in theory. This ushered in the era of deep learning and generative AI.

We have thus retraced over eighty years of AI history, from its conception thanks to Turing’s vision and the immense theoretical work of his colleagues in the 1940s and 1950s, to its complex evolution over the following decades, culminating in the generative AI that we all use today.

Conclusions

Can we therefore speak of a true technological revolution? The answer is: it depends. It depends on where we focus our attention. From a theoretical and technical standpoint, contemporary AI is the result of a natural evolution of pioneering theories developed more than eighty years ago—a process of vision, research, and technological advancement. In this sense, it cannot be called a revolution. The revolutionary aspect, however, lies in access to AI tools. Algorithms and software, which until just a few years ago were the exclusive domain of governments and corporations, have become easily accessible to everyone. Today, anyone can harness the potential of AI across a wide range of fields, obtaining potentially enormous benefits.

noname.solutions believes in the potential of Artificial Intelligence as a tool to integrate into its software solutions, useful for expanding user experiences and multiplying efficiency in everyday work. We believe that the economic success of businesses is not only a function of savings and investment but also of the productive multiplication of their human and mechanical resources.

This first episode of a series dedicated to AI comes to a close. In the upcoming episodes, we will cover technical approaches and the state of the art of generative AI, ethical issues, the regulatory framework in the EU, and much more.

Mine was the generation that had to learn how to use computers to study or work; the next will be the generation that must learn to use AI. Because AI is the new computer.

– Jen-Hsun Huang, co-founder and CEO of NVIDIA
